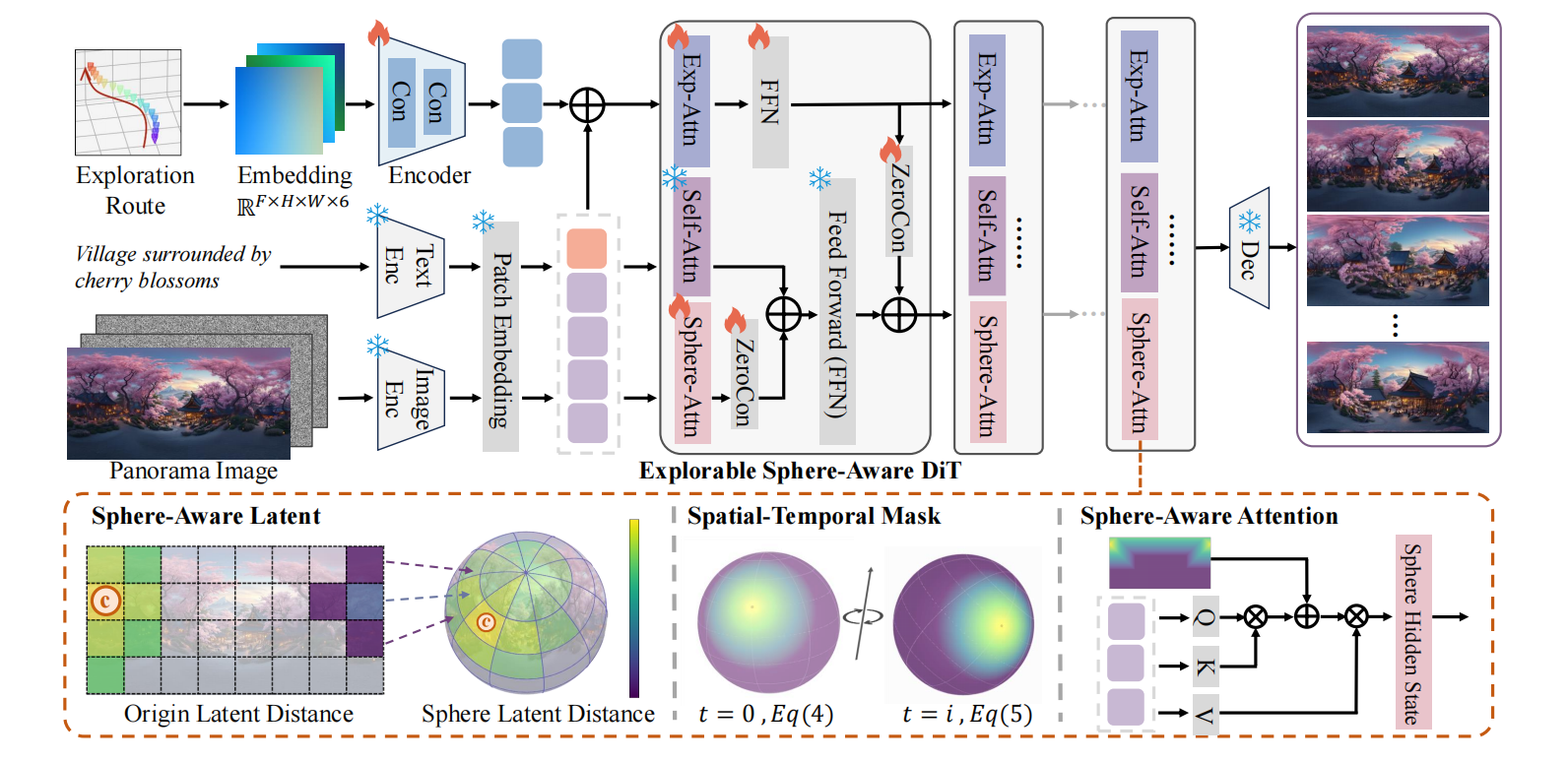

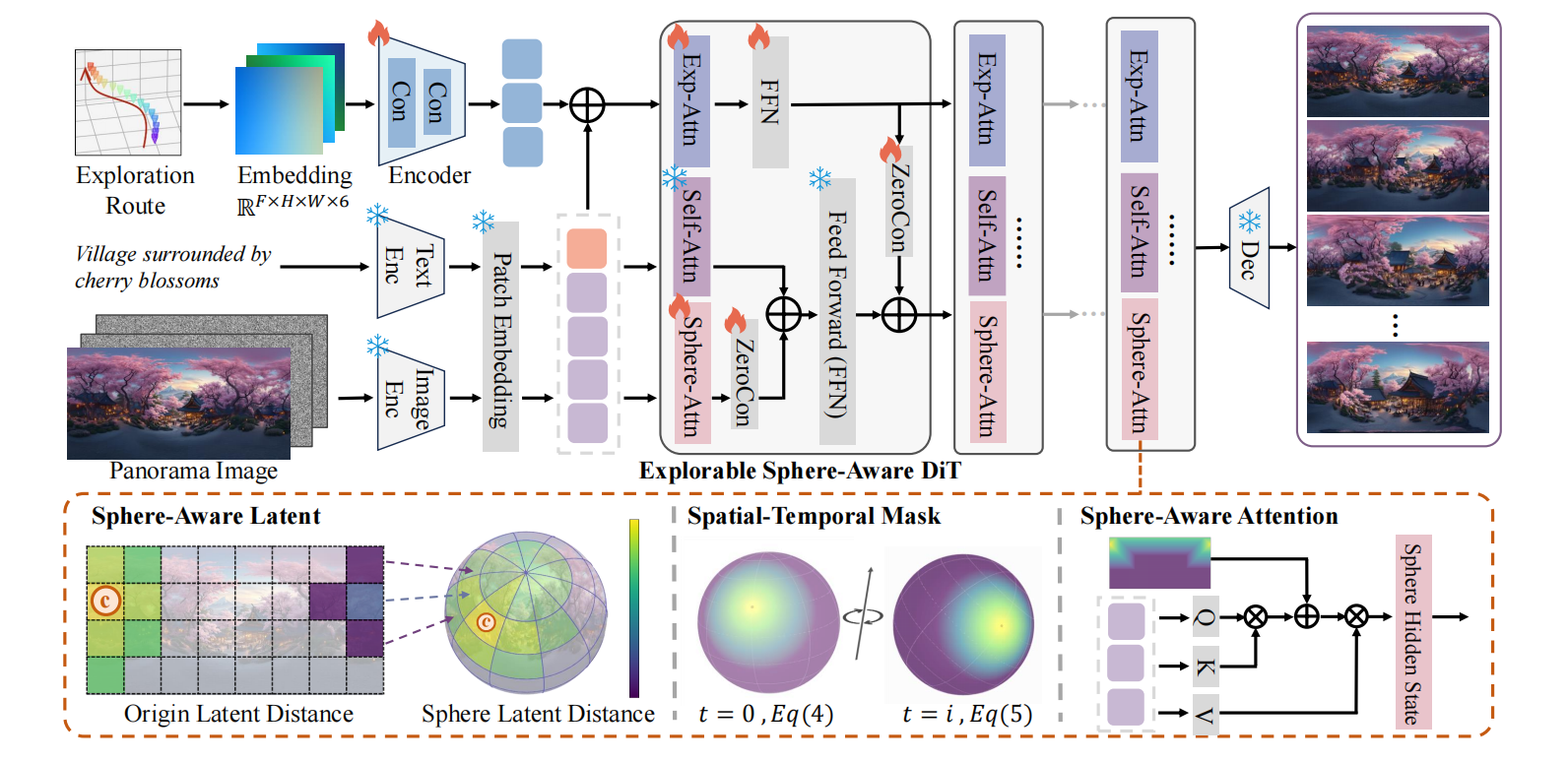

Generating a complete and explorable 360-degree visual world enables a wide range of downstream applications. While prior works have advanced the field, they remain constrained by either a narrow field of view, which hinders the synthesis of continuous and holistic scenes, or insufficient camera controllability, which restricts free exploration by users or autonomous agents. To address this, we propose PanoWorld-X, a novel framework for high-fidelity and controllable panoramic video generation with diverse camera trajectories. Specifically, we first construct a large-scale dataset of paired panoramic videos and exploration routes by simulating camera trajectories in virtual 3D environments with Unreal Engine. Because the spherical geometry of panoramic data is misaligned with the inductive priors of conventional video diffusion models, we then introduce a Sphere-Aware Diffusion Transformer architecture that reprojects equirectangular features onto the spherical surface to model geometric adjacency in latent space, significantly enhancing visual fidelity and spatiotemporal continuity. Extensive experiments demonstrate that PanoWorld-X achieves superior performance in motion range, control precision, and visual quality, underscoring its potential for real-world applications.
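To make the geometric mismatch concrete, the following is a minimal sketch of the standard equirectangular-to-sphere mapping, not the Sphere-Aware Diffusion Transformer itself. The function names, the grid size, and the axis convention are assumptions chosen for illustration. It shows two cases where adjacency on the image grid disagrees with adjacency on the sphere: the left and right image edges, and the top row near the pole.

```python
import math

def equirect_to_sphere(col, row, width, height):
    """Map the centre of grid cell (col, row) to a unit vector on the sphere.

    Assumed convention: columns span longitude [-pi, pi), rows span
    latitude from +pi/2 (top) to -pi/2 (bottom).
    """
    lon = ((col + 0.5) / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - ((row + 0.5) / height) * math.pi
    return (
        math.cos(lat) * math.cos(lon),
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
    )

def great_circle_angle(p, q):
    """Angle in radians between two unit vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    return math.acos(max(-1.0, min(1.0, dot)))

# Hypothetical latent grid size, for illustration only.
W, H = 64, 32
mid = H // 2

# Two horizontally adjacent cells near the equator.
equator_step = great_circle_angle(
    equirect_to_sphere(0, mid, W, H), equirect_to_sphere(1, mid, W, H)
)

# First and last columns: far apart on the grid, adjacent on the sphere.
seam_angle = great_circle_angle(
    equirect_to_sphere(0, mid, W, H), equirect_to_sphere(W - 1, mid, W, H)
)

# Top row, half the image width apart: still close, because both cells
# sit next to the pole.
pole_angle = great_circle_angle(
    equirect_to_sphere(0, 0, W, H), equirect_to_sphere(W // 2, 0, W, H)
)

print(f"equator step: {equator_step:.4f} rad")
print(f"seam (col 0 vs col {W - 1}): {seam_angle:.4f} rad")
print(f"top row (col 0 vs col {W // 2}): {pole_angle:.4f} rad")
```

Under this convention, the seam pair is exactly as close on the sphere as two neighbouring columns, and the two top-row cells are separated by only a small angle despite being half an image apart. A model that treats the equirectangular grid as a flat image has no built-in notion of either relationship.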

@article{yin2025panoworld,
  title={PanoWorld-X: Generating Explorable Panoramic Worlds via Sphere-Aware Video Diffusion},
  author={Yin, Yuyang and Guo, HaoXiang and Liu, Fangfu and Wang, Mengyu and Liang, Hanwen and Li, Eric and Wang, Yikai and Jin, Xiaojie and Zhao, Yao and Wei, Yunchao},
  journal={arXiv preprint arXiv:2509.24997},
  year={2025}
}